How to Read the Data From Sql Using Hadoop for Machine Learning

Hold on! Wait a minute and think before you join the race and become a Hadoop maniac. Hadoop has been the buzzword in the IT industry for some time now. Everyone seems to be in a rush to learn, implement and adopt Hadoop. And why should they not? The IT industry is all about change. You will not like to be left behind while others leverage Hadoop. However, just learning Hadoop is not enough. What most people overlook, and what according to me is the most important aspect, is "when to use and when not to use Hadoop".

Resources to Refer:

- 5 Reasons to Learn Hadoop

- All You Need To Know About Hadoop

- 10 Reasons Why Big Data Analytics is the Best Career Move

- Interested in Big data and Hadoop – Check out the Curriculum

You may also go through this video recording, where our Hadoop Training experts have explained the topics in detail with examples.

In this blog you will understand various scenarios where using Hadoop directly is not the best choice, but where it can still be of benefit when used in the industry-accepted way. Also, you will understand scenarios where Hadoop should be the first choice. As your time is way too valuable for me to waste, I shall now start with the subject of discussion of this blog.


First, we will see the scenarios/situations when Hadoop should not be used directly!

When Not To Use Hadoop

# 1. Real Time Analytics

If you want to do some Real Time Analytics, where you are expecting results quickly, Hadoop should not be used directly. This is because Hadoop works on batch processing, hence the response time is high.

The diagram below explains how processing is done using MapReduce in Hadoop.

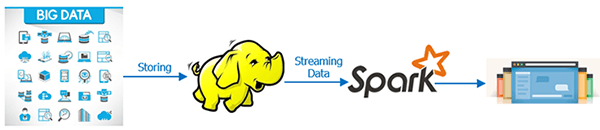
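To make the batch flow concrete, here is a minimal, single-machine sketch of the three MapReduce phases (map, shuffle, reduce) applied to a word count. It is plain Python and does not use the Hadoop API; the function names and sample lines are made up for illustration. In a real cluster each phase runs across many nodes and writes intermediate results to disk, which is a large part of why the response time is high.

```python
from collections import defaultdict


def map_phase(lines):
    """Map: emit a (word, 1) pair for every word in every input line."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key, between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: collapse each key's list of values into a single count."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(lines):
    """Run the three phases in order on an in-memory list of lines."""
    return reduce_phase(shuffle_phase(map_phase(lines)))


if __name__ == "__main__":
    # Hypothetical sample input, standing in for files stored in HDFS.
    sample = ["hadoop stores data", "hadoop processes data in batches"]
    print(word_count(sample))
```

Nothing is returned until every phase has finished over the whole input, which is the defining trait of batch processing.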

Real Time Analytics – Industry Accepted Way

Since Hadoop cannot be used for real time analytics, people explored and developed a new way in which they can use the strength of Hadoop (HDFS) and make the processing real time. So, the industry accepted way is to store the Big Data in HDFS and mount Spark over it. By using Spark the processing can be done in real time and in a flash (real quick).

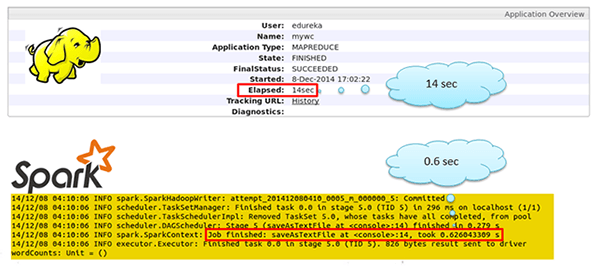

For the record, Spark is said to be up to 100 times faster than Hadoop MapReduce. Oh yes, I said 100 times faster; it is not a typo. The diagram below shows the comparison between MapReduce processing and processing using Spark.

I took a dataset and executed a line processing code written in MapReduce and Spark, one by one. Keeping factors like the size of the dataset, the logic etc. constant for both technologies, below was the time taken by MapReduce and Spark respectively.

- MapReduce – 14 sec

- Spark – 0.6 sec

This is a good difference. However, good is not good enough. To achieve the best performance of Spark we have to take a few more measures like fine-tuning the cluster etc.
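As a quick check on the two timings above, the ratio can be worked out directly. The helper name below is made up for illustration; the two numbers are the ones reported in this experiment.

```python
def speedup(baseline_seconds, candidate_seconds):
    """Return how many times faster the candidate ran than the baseline."""
    return baseline_seconds / candidate_seconds


if __name__ == "__main__":
    mapreduce_seconds = 14.0  # MapReduce timing reported above
    spark_seconds = 0.6       # Spark timing reported above
    ratio = speedup(mapreduce_seconds, spark_seconds)
    print(f"Spark was about {ratio:.1f}x faster on this run")
```

On this untuned run the ratio comes to roughly 23x, which is why further tuning is needed to get anywhere near the 100x figure.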


Resources to Refer:

- Hive & Yarn Get Electrified By Spark

- Apache Spark With Hadoop – Why it Matters?

- Interested in Spark – See its Content

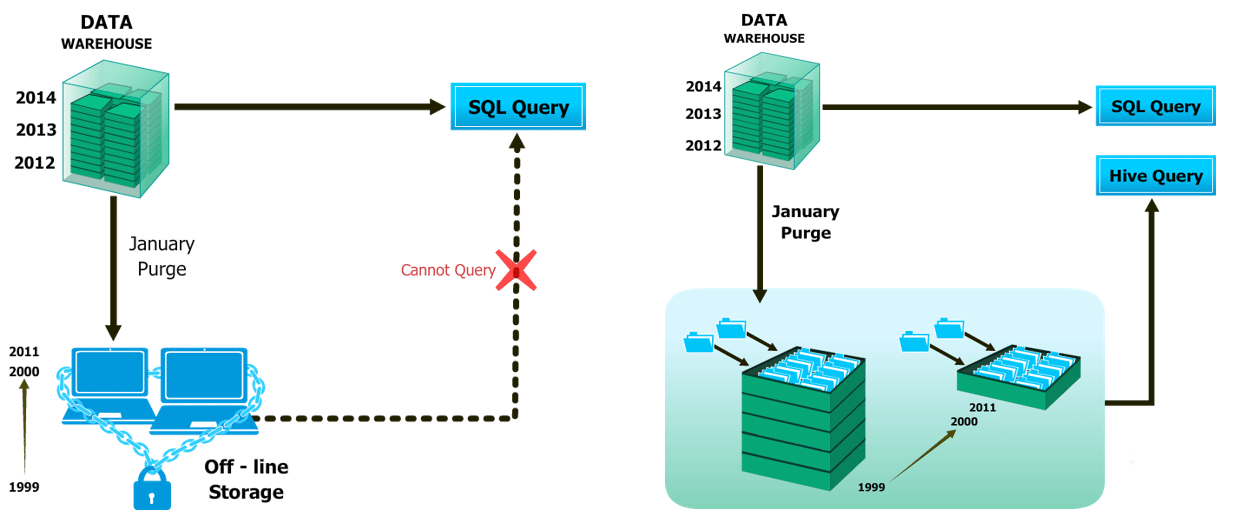

# 2. Not a Replacement for Existing Infrastructure

Hadoop is not a replacement for your existing data processing infrastructure. However, you can use Hadoop along with it.

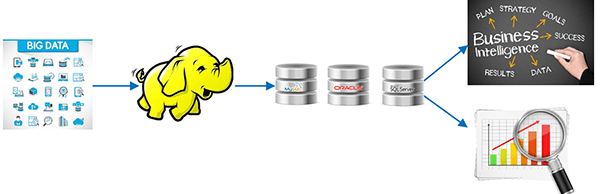

Industry accepted way:

All the historical big data can be stored in Hadoop HDFS, where it can be processed and transformed into structured, manageable data. After processing the data in Hadoop you need to send the output to relational database technologies for BI, decision support, reporting etc.

The diagram below will make this clearer to you and this is an industry-accepted way.
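The hand-off from Hadoop to a relational database can be sketched as follows. This is only an illustration under stated assumptions: the job output is taken to be tab-separated `key<TAB>value` lines, Python's built-in `sqlite3` stands in for the relational database, and the table name `page_hits`, the helper `load_job_output` and the sample rows are all hypothetical. In practice a dedicated transfer tool such as Sqoop would typically do this step.

```python
import sqlite3


def load_job_output(lines, connection):
    """Parse tab-separated 'page<TAB>hits' lines and load them into a table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS page_hits (page TEXT PRIMARY KEY, hits INTEGER)"
    )
    rows = []
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue  # skip blank lines in the job output
        page, hits = line.split("\t")
        rows.append((page, int(hits)))
    connection.executemany(
        "INSERT OR REPLACE INTO page_hits (page, hits) VALUES (?, ?)", rows
    )
    connection.commit()
    return len(rows)


if __name__ == "__main__":
    # Hypothetical aggregated output of a Hadoop job.
    job_output = ["/home\t1200\n", "/pricing\t340\n", "/docs\t875\n"]
    conn = sqlite3.connect(":memory:")  # stand-in for the reporting database
    load_job_output(job_output, conn)
    query = "SELECT page, hits FROM page_hits ORDER BY hits DESC"
    for page, hits in conn.execute(query):
        print(page, hits)
```

Once the small, structured result sits in the database, BI and reporting tools can query it with ordinary SQL while the raw history stays in HDFS.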

Word to the wise:

- Hadoop is not going to replace your database, but your database isn't likely to replace Hadoop either.

- Different tools for different jobs, as simple as that.

Resources to Refer:

- 7 Ways Big Data Training Can Change Your Organization

- Oracle – HDFS Using Sqoop

# 3. Multiple Smaller Datasets

The Hadoop framework is not recommended for small, structured datasets, as there are other tools available in the market, like MS Excel, RDBMS etc., which can do this work quite easily and at a faster pace than Hadoop. For small data analytics, Hadoop can be costlier than other tools.

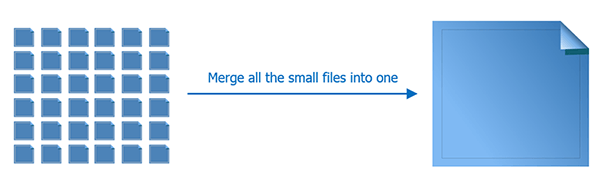

Industry Accepted Way:

We are smart people. We always find a better way. In this case, since all the small files (for example, daily server logs) are of the same format and structure, and the processing to be done on them is the same, we can merge all the small files into one big file and then finally run our MapReduce program on it.

In order to prove the above theory, we carried out a small experiment.
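The merge step itself can be as simple as concatenating the files. Below is a minimal sketch on a local file system; the function name, the `*.log` pattern and the sample file names are assumptions made for illustration, and it assumes every small file ends with a newline so records do not run together.

```python
import shutil
import tempfile
from pathlib import Path


def merge_small_files(source_dir, merged_path, pattern="*.log"):
    """Concatenate every file matching `pattern` in `source_dir` into one file."""
    # The file list is built before the output is opened, so a newly created
    # output file in the same directory is not picked up as an input.
    source_files = sorted(Path(source_dir).glob(pattern))
    with open(merged_path, "wb") as merged:
        for source in source_files:
            with open(source, "rb") as part:
                shutil.copyfileobj(part, merged)
    return len(source_files)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        # Create three hypothetical daily log files.
        for day in range(1, 4):
            name = f"server-day{day}.log"
            (Path(workdir) / name).write_text(f"day {day} GET /home 200\n")
        merged_file = Path(workdir) / "all-days.txt"
        count = merge_small_files(workdir, merged_file)
        print(f"merged {count} files")
        print(merged_file.read_text(), end="")
```

The merged file is then uploaded once and used as the single input of the MapReduce job.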

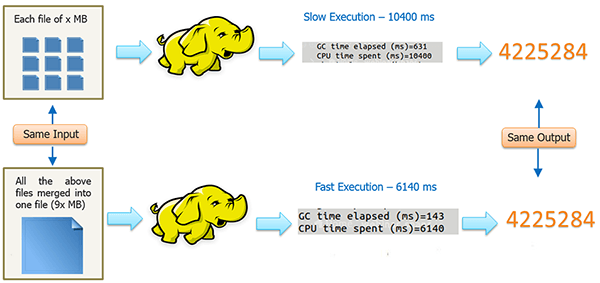

The diagram below explains the same:

We took 9 files of x MB each. Since these files were small we merged them into one big file. The entire size was 9x MB. (Pretty simple math: 9 * x MB = 9x MB)

Finally, we wrote a MapReduce program and executed it twice.

- First execution (input as small files):

  - Input data: 9 files of x MB each

  - Output: 4225284 records

  - Time taken: 10400 ms

- Second execution (input as one big file):

  - Input data: 1 file of 9x MB

  - Output: 4225284 records

  - Time taken: 6140 ms

So as you can see, the second execution took less time than the first one (6140 ms versus 10400 ms, roughly 41% less). Hence, it proves the point.

Resources to Refer:

- Map-Reduce Design Patterns Course

# 4. Novice Hadoopers

Unless you have a good understanding of the Hadoop framework, it's not suggested to use Hadoop for production. Hadoop is a technology which should come with a disclaimer: "Handle with care". You should know it before you use it or else you will end up like the kid below.

Learning Hadoop and its ecosystem tools, and deciding which technology suits your need, is again a different level of complexity.

Resources to Refer:

- Hadoop Developer Job Responsibility & Skills

- 7 Ways How Big Data Training Can Change Your Organization

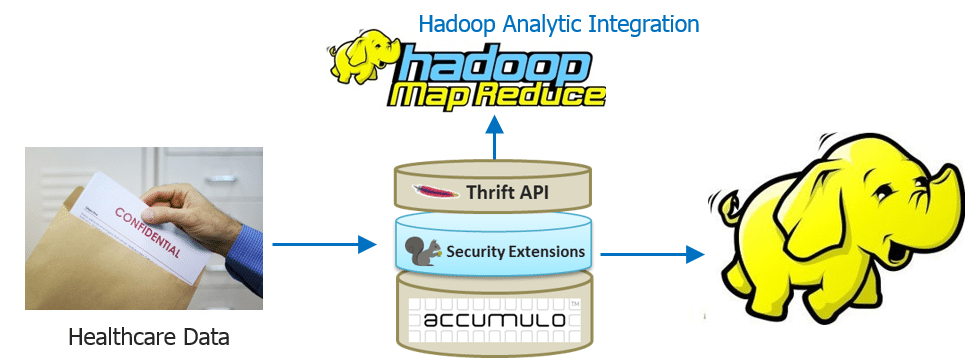

# 5. Where Security is the main Concern?

Many enterprises, especially within highly regulated industries dealing with sensitive information, aren't able to move as quickly as they would like towards implementing Big Data projects and Hadoop.

Industry-Accepted Way

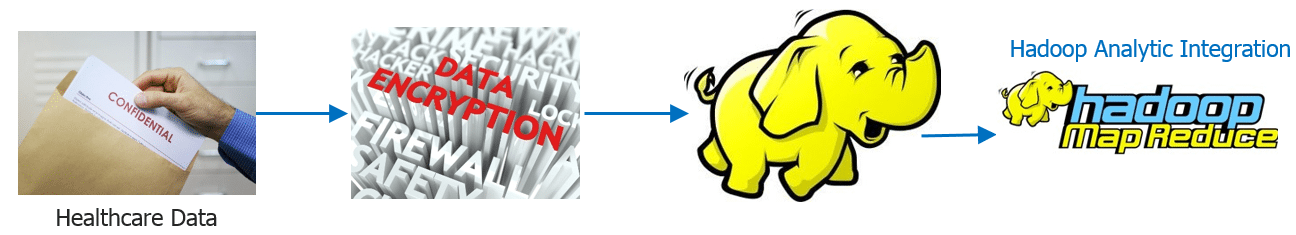

There are multiple ways to ensure that your sensitive data is secure with the elephant (Hadoop).

- Encrypt your data while moving it to Hadoop. You can easily write a MapReduce program, using any encryption algorithm, which encrypts the data and stores it in HDFS.

Finally, you use the data for further MapReduce processing to get relevant insights.

The other way that I know and have used is using Apache Accumulo on top of Hadoop. Apache Accumulo is a sorted, distributed key/value store that provides robust, scalable, high performance data storage and retrieval.

Ref: https://accumulo.apache.org/

What they missed mentioning in the definition is that it implements a security mechanism known as cell-level security, and hence it emerges as a good option where security is a concern.
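The idea behind cell-level security can be illustrated with a small model: every cell carries its own visibility labels, and a scan returns only the cells that the reader's authorizations satisfy. This is a conceptual sketch in plain Python, not the Accumulo API; the `Cell` class, the `scan` function and the sample data are hypothetical, and the model is simplified so that a reader must hold all of a cell's labels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    row: str
    column: str
    value: str
    required_labels: frozenset  # every label here must be held by the reader


def scan(cells, authorizations):
    """Return only the cells whose required labels are all in `authorizations`."""
    held = frozenset(authorizations)
    return [cell for cell in cells if cell.required_labels <= held]


if __name__ == "__main__":
    # Hypothetical table: one row, three cells with different visibility.
    staff = frozenset({"staff"})
    clinical = frozenset({"staff", "medical"})
    public = frozenset()  # no labels required, visible to everyone
    table = [
        Cell("patient-17", "name", "A. Sample", staff),
        Cell("patient-17", "diagnosis", "hypertension", clinical),
        Cell("patient-17", "room", "204", public),
    ]
    # A reader holding only "staff" does not get the diagnosis cell.
    for cell in scan(table, {"staff"}):
        print(cell.column, cell.value)
```

Because the check happens per cell rather than per table or per file, two users can read the same row and see different columns.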

Resources to Refer:

- Implementing R and Hadoop in Banking Domain

When To Use Hadoop

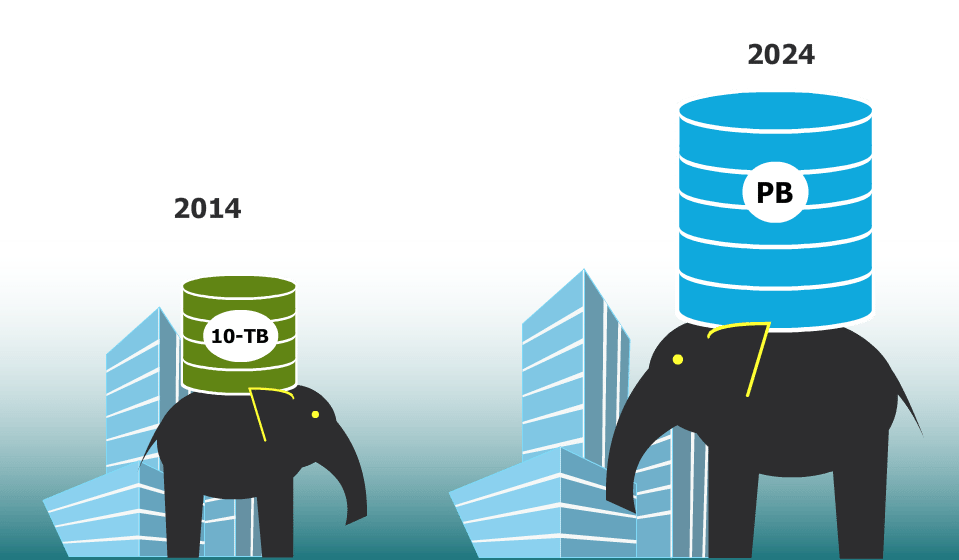

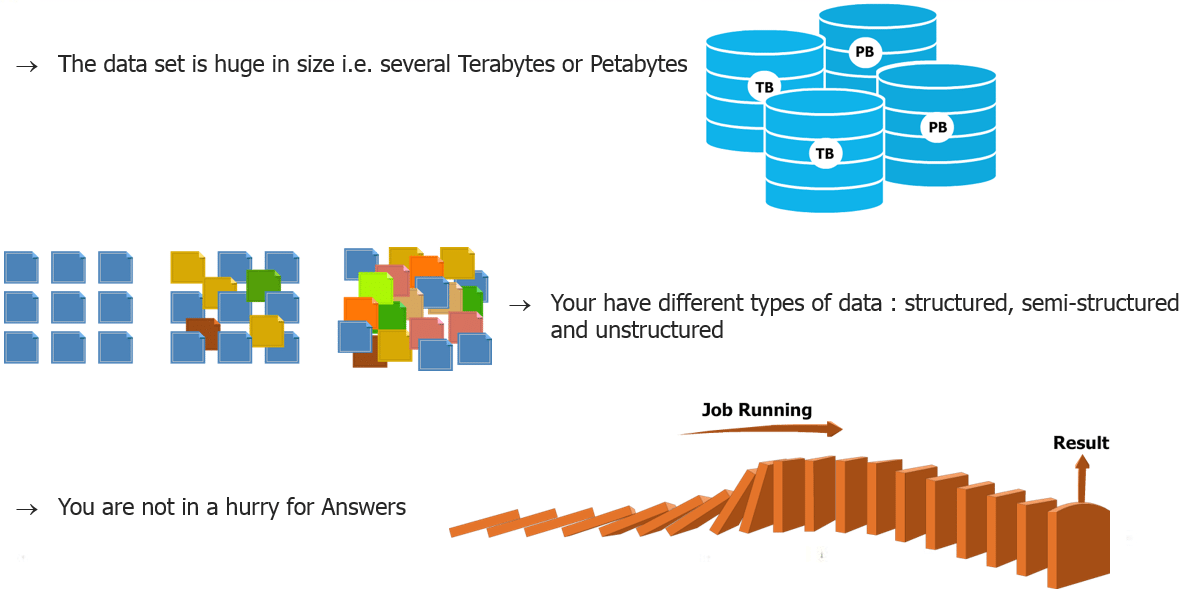

# 1. Data Size and Data Diversity

When you are dealing with huge volumes of data coming from various sources and in a variety of formats, then you can say that you are dealing with Big Data. In this case, Hadoop is the right technology for you.

Resources to Refer:

- Game Changing Big Data Use-Cases

# 2. Future Planning

It is all about getting ready for challenges you may face in future. If you anticipate Hadoop as a future need then you should plan accordingly. To implement Hadoop on your data you should first understand the level of complexity of the data and the rate at which it is going to grow. So, you need cluster planning. It may begin with building a small or medium cluster as per the data (in GBs or a few TBs) available at present, and scaling up your cluster in future depending on the growth of your data.
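A back-of-the-envelope version of this planning can be written down as a short calculation. The sketch below is only a starting point under stated assumptions: the function name, the growth rate, the per-node capacity and the 25% headroom are illustrative values, not recommendations. The replication factor of 3 matches the HDFS default.

```python
import math


def datanodes_needed(current_tb, yearly_growth_rate, years, usable_tb_per_node,
                     replication_factor=3, headroom=0.25):
    """Estimate the datanode count for projected data plus spare capacity."""
    # Compound growth of the logical data over the planning horizon.
    projected_tb = current_tb * (1 + yearly_growth_rate) ** years
    # HDFS stores each block `replication_factor` times.
    raw_tb = projected_tb * replication_factor
    # Keep a fraction of every disk free for temporary and intermediate data.
    raw_tb_with_headroom = raw_tb / (1 - headroom)
    return math.ceil(raw_tb_with_headroom / usable_tb_per_node)


if __name__ == "__main__":
    # Hypothetical inputs: 2 TB today, 50% growth per year, 8 TB per node.
    for horizon in (0, 1, 3, 5):
        nodes = datanodes_needed(2, 0.5, horizon, 8)
        print(f"after {horizon} year(s): {nodes} datanode(s)")
```

Running the estimate for a few horizons shows when the small starting cluster will need more datanodes, which is the scale-up path described above.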

Resources to Refer:

- 10 Reasons why Big Data Analytics is the Best Career Move


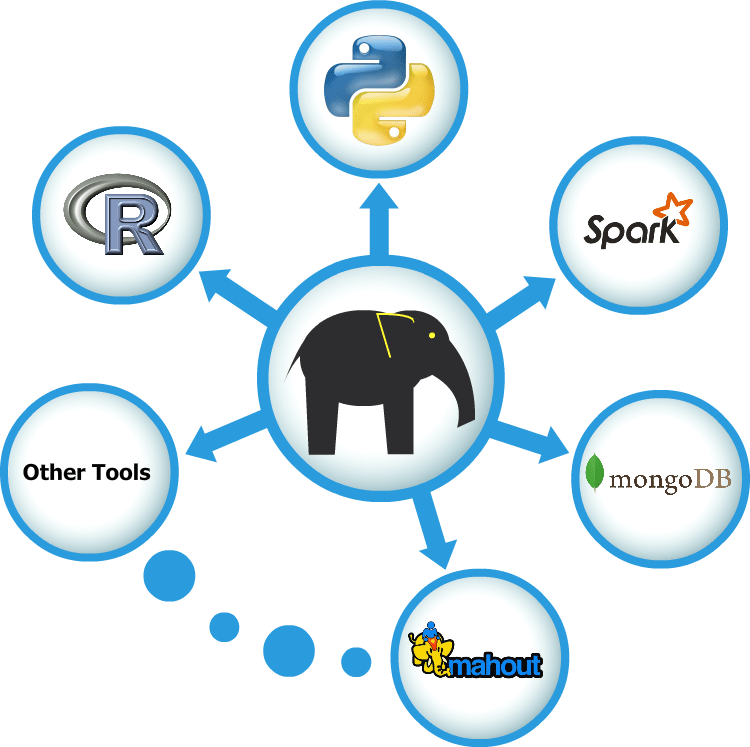

# 3. Multiple Frameworks for Big Data

There are various tools for various purposes. Hadoop can be integrated with multiple analytic tools to get the best out of it, like Mahout for machine learning, R and Python for analytics and visualization, Spark for real time processing, MongoDB and HBase for NoSQL databases, Pentaho for BI etc.

I will not be showing the integration in this blog but will show it in the Hadoop Integration series. I am already excited about it and I hope you feel the same.

Resources to Refer:

- Check Out Machine Learning with Mahout Course

- Check Out Business Analytics with R Course

- Python for Analytics Course

- MongoDB : Introduction to NoSQL Database

- Overview of HBase Storage Architecture

- MongoDB vs HBase vs Cassandra

# 4. Lifetime Data Availability

When you want your data to be live and running forever, it can be achieved using Hadoop's scalability. There is no limit to the size of cluster that you can have. You can increase the size anytime as per your need by adding datanodes to it at minimal cost.

The bottom line is to use the right technology as per your need.

Got a question for us? Please mention it in the comments section and we will get back to you.


Source: https://www.edureka.co/blog/5-reasons-when-to-use-and-not-to-use-hadoop/
